SonoTexto: live coding with sound recordings

by Hernani Villaseñor

In this entry I describe the SuperCollider class SonoTexto and the artistic concept behind it. SonoTexto records a few seconds of the sound environment, or of the sound produced by instruments, and plays it back so that it can be used as sound material in a live coding situation. This class is part of a series of sound pieces and technological objects I am developing in UNAM’s Graduate Program in Music in Mexico. These pieces start from the question: how do computer music practices, such as live coding, build their relationship to technological development?

Technology development

SonoTexto consists of two documents: a SuperCollider class and a script of SynthDefs. The class defines three methods: 1) .boot activates the object and calls the SynthDef script; 2) .rec records sound data into the buffers; and 3) .write writes the content of the buffers to hard disk if you want to keep it. The SynthDef script contains four buffers that hold the sound data, four SynthDefs that record into the buffers and four SynthDefs that play them back. The data held in the buffers can be written into a folder as a sound file. The sound is recorded with the computer’s microphone or with one connected to a sound card. In this video you can see how it works with SuperCollider Patterns.
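The record, play and write cycle described above can be sketched in plain SuperCollider. This is a minimal illustration that uses a single buffer instead of four, and it is not the SonoTexto source: the names \recSketch, \playSketch, \layers, ~buf and startFrac, the four-second length and the file name are all assumptions made for the example.

```supercollider
// Hypothetical sketch; none of these names come from the SonoTexto repository.
(
s.waitForBoot {
    // One mono buffer holding 4 seconds of audio (SonoTexto itself uses four buffers).
    ~buf = Buffer.alloc(s, s.sampleRate * 4, 1);

    // Record the first hardware input into the buffer once, then free the synth.
    SynthDef(\recSketch, { |bufnum = 0|
        RecordBuf.ar(SoundIn.ar(0), bufnum, loop: 0, doneAction: 2);
    }).add;

    // Play a fragment of the buffer at a given rate and start position (0..1).
    SynthDef(\playSketch, { |out = 0, bufnum = 0, rate = 1, startFrac = 0, amp = 0.5, sustain = 1|
        var env = EnvGen.kr(Env.linen(0.01, sustain, 0.05), doneAction: 2);
        var sig = PlayBuf.ar(1, bufnum, rate * BufRateScale.kr(bufnum),
            startPos: startFrac * BufFrames.kr(bufnum), loop: 1);
        Out.ar(out, (sig * env * amp) ! 2);
    }).add;
};
)

// Evaluate the following one at a time, after the server has booted.

// 1. Capture 4 seconds from the microphone.
Synth(\recSketch, [\bufnum, ~buf]);

// 2. Use the recording as material for a pattern.
(
Pdef(\layers, Pbind(
    \instrument, \playSketch,
    \bufnum, ~buf,
    \rate, Prand([0.5, 1, 2], inf),
    \startFrac, Pwhite(0.0, 0.8),
    \dur, 0.5
)).play;
)

// 3. Optionally keep the recording (assumes the recordings folder already exists).
~buf.write(thisProcess.platform.recordingsDir +/+ "sonotexto-sketch.aiff", "aiff", "int24");
```

Recording with loop: 0 and doneAction: 2 means each evaluation of step 1 overwrites the buffer exactly once, so the pattern in step 2 can keep running while new material replaces the old.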

Artistic practice

The idea is to record and play short sounds, such as the acoustic environment or the sound produced by acoustic instruments, and to use them as sound material during a live coding performance. In the first case, small fragments of the sound environment of the space where the live coding takes place are recorded and then modified with different approaches in SuperCollider, such as Patterns, Routines or ProxySpace. Here the microphone captures a moment of the sound environment; the recording contains the sounds that happen in the space and the acoustics of the space itself. When the recorded sound is played back, the acoustics of the room or hall react to its own sound; that is to say, the shape of the space is defined by its own source. A series of sound layers builds the sound of the improvisation: first the acoustic environment, then the digitized sounds of that environment, and finally a few processed sounds that are incorporated into the overall output. The sound of the space and the sound coming from the speakers together constitute the output of the performance.

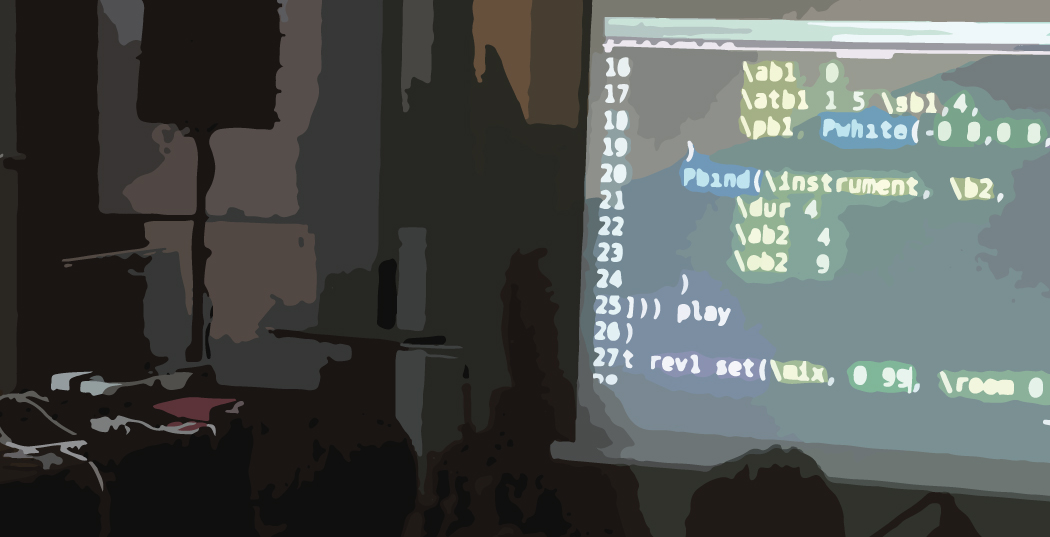

The example to the right shows a performance at Centro Cultural el Rule in Mexico City on 26 June 2019. In this performance I used SonoTexto to capture the sound environment; the recorded sound was modified using patterns and processed with the class TecnoTexto, another piece of code from my research.

A second case is to capture the sound produced by the instruments of an improvisation ensemble in order to play it back, modify it and process it during the improvisation. The computer returns a sound that is incorporated into the improvisation ensemble, generating a hybrid sound built from the relationship between the acoustic source and its digitally modified and processed version.

The example below shows the piece “Juegos de improvisación”, written by Nefi Dominguez (2016) and performed by Aarón Escobar, Diego Villaseñor, Juan Carlos Ponce and me on 3rd May 2019 at the Music Faculty, UNAM. This example is a rule-based improvisation: the score of the piece proposes multiple gestures to be interpreted by any instrument. In my case I use the computer with SuperCollider, controlling sound recordings and synthesis with patterns.

Using SuperCollider to make music entails using the objects and classes that come with the program by default. A first step towards composing, playing or live coding is to understand how the software functions. From a creative perspective, users usually make music at a high level of abstraction because it is easy to express musical ideas there, but if we explore the lower programming levels of our programs as an artistic practice, we can build a relationship to the technology while starting to open black boxes, not only as a technological matter but as an aesthetic one.

SonoTexto can be downloaded from this repository:

https://github.com/hvillase/sonotexto

About the author

Hernani Villaseñor is a Mexican musician interested in sound, code and improvisation. He is currently a PhD student in the Music Graduate Program of the National Autonomous University of Mexico. His current research is about the implications of writing source code at different levels and layers to produce music and sound. He is also interested in artistic research and the relation between art and technology. As a musician he performs and improvises computer music with source code as the interface, a computer art practice known as live coding, in a range of styles from techno to experimental sound. He has collaborated with artists in the fields of cinema, experimental video, photography and installation, and is a member of the laptop band LiveCodeNet Ensamble. He has performed in many venues and participated in various conferences in countries of the Americas and Europe. He has co-organized three international symposiums dedicated to music and code called /*vivo*/, as well as many concerts of music and technology for the Centro Multimedia CENART in Mexico. He has also taught sound and computer music at Universidad del Claustro de Sor Juana and at the Music Faculty of UNAM.

www.hernanivillasenor.com